Gemini Live will soon make suggestions for you in real-world situations

It’s getting smarter at more than just processing text and images.

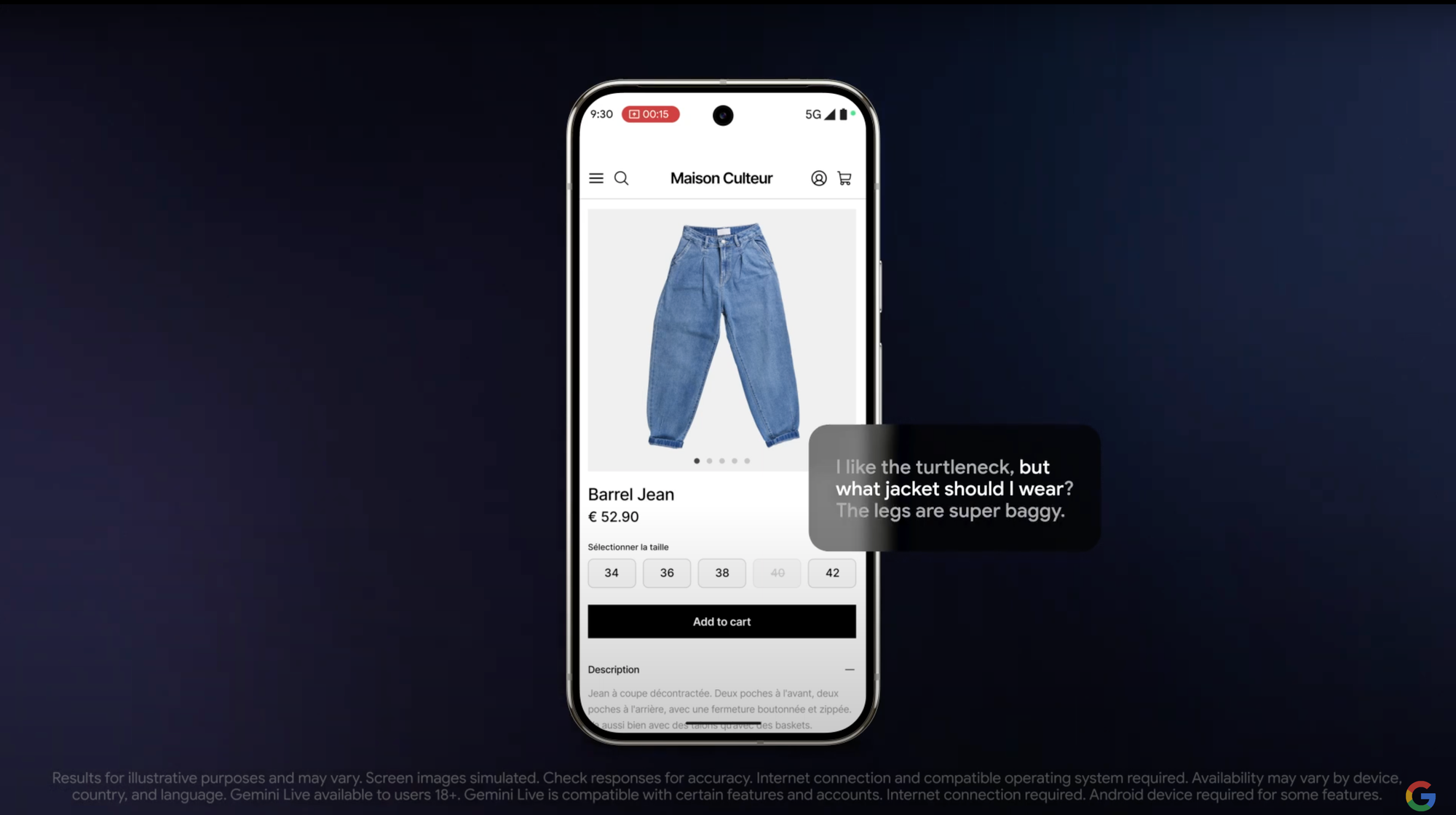

Imagine you’re shopping online, unsure whether that jacket actually suits your style. Instead of texting a friend for advice, you simply point your camera at the screen, and an AI instantly analyzes the fit and colors and even suggests alternatives.

Or maybe you’re rearranging your living room, and instead of scrolling through Pinterest for ideas, you just pan your phone around, and AI tells you exactly where to place that new lamp. That’s the kind of real-world AI assistant Google has been promising for nearly a year. And now, it’s finally happening.

At Mobile World Congress 2025, Google confirmed that later this month, Gemini Live, its AI chatbot, will support live video input on Android devices. That means you’ll be able to open your camera or share your screen and ask Gemini questions about what it sees, making it the most interactive version of Google’s AI yet.

The company first hinted at the feature during Google I/O 2024, when it introduced Project Astra, an experimental AI assistant designed to process video input in real time. Google’s vision was clear: an AI assistant that could actively see and understand the world the way we do.

Now, Gemini is about to get better at understanding the world around you, not just at processing text and images. Until now, its video capabilities have been unreliable: it would sometimes summarize YouTube videos and sometimes refuse to do so for no apparent reason. This update should change that. With live video streaming, you’ll be able to show Gemini what you’re talking about instead of just describing it.

However, there’s a catch: this feature won’t be free. The new video capabilities will be available only to Gemini Advanced subscribers, so you’ll need Google’s AI Premium plan, which costs $20 per month, to use them.

In last year’s I/O demo, the feature seemed seamless and futuristic, but that was a controlled environment. Will the real-world version be just as impressive?