Google DeepMind unveils Veo 2, a new challenger to OpenAI’s Sora

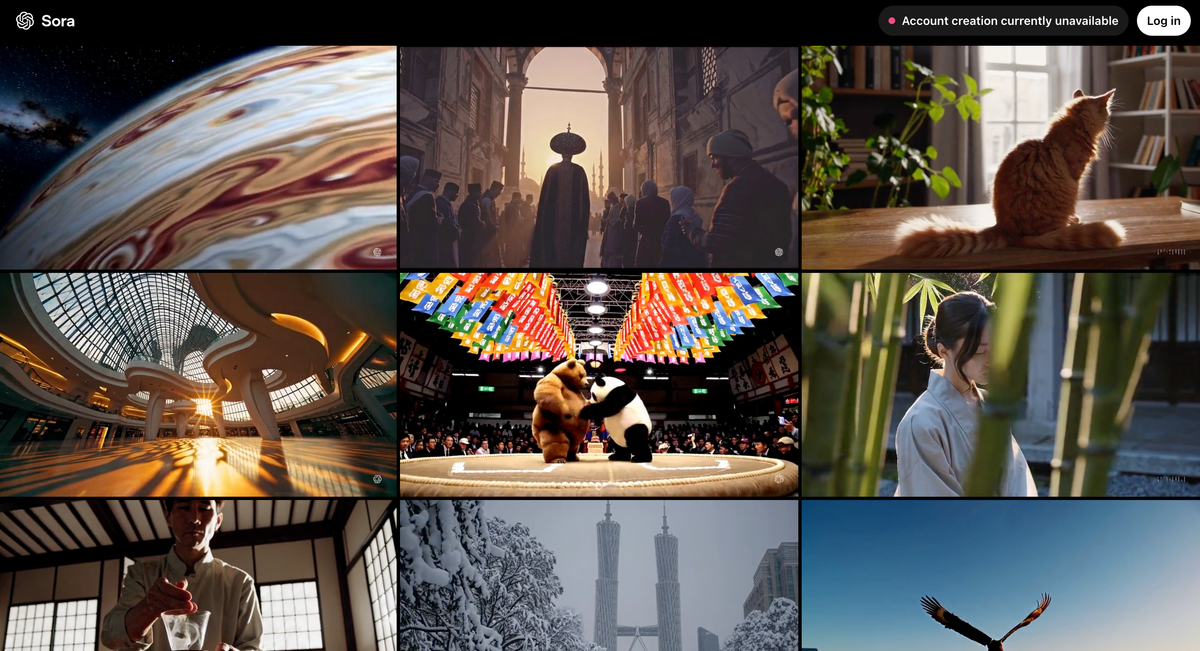

Just a week after OpenAI launched its much-hyped Sora video generator, Google DeepMind has fired back with Veo 2, its latest AI video model—and it’s already turning heads.

Capable of producing videos in up to 4K resolution, Veo 2 signals Google’s ambition to pull ahead in the increasingly competitive AI video race.

At its core, Veo 2 builds on the foundation of its predecessor Veo, which maxed out at 1080p resolution. According to Google, the new model not only sharpens the visuals but also improves “camera control” and physics, letting users generate everything from sweeping pans to cinematic close-ups with just a prompt.

According to the tech giant, the upgraded physics engine enables more realistic motion, fluid dynamics, and human expressions—key areas where AI video tools have historically struggled.

DeepMind claims Veo 2 beats its rivals in key areas. In human preference tests, 59% of raters reportedly chose Veo 2’s output over that of Sora Turbo (OpenAI’s faster version of Sora). Veo 2 also compared favourably against Meta’s Movie Gen and MiniMax, slipping slightly below 50% only against China’s Kling v1.5. Google claims Veo 2’s improved understanding of physics, light refraction, and object motion gives it an edge.

For now, however, these capabilities are more theoretical than practical. Although Veo 2 can in principle generate two-minute clips in 4K, four times the pixel count and six times the length of what Sora can handle, it is currently available only in Google’s experimental VideoFX tool, where output is capped at 720p resolution and eight-second clips.

OpenAI’s Sora, by comparison, currently generates up to 1080p resolution for clips up to 20 seconds. However, Google says it’s working to scale Veo 2’s features and will gradually expand access to longer, higher-resolution outputs.

Another challenge is that Veo 2, despite its upgraded physics, still struggles with coherence in complex scenes, such as intricate gymnastics movements. This problem is shared by other AI video tools on the market, including OpenAI’s Sora and Runway’s Gen-3 Alpha.

Meanwhile, to guard against misuse of the “very capable” tool, DeepMind says it tags Veo 2 outputs with invisible SynthID watermarks to help identify AI-generated content.

However, one unresolved question surrounding Veo 2 is its training data. DeepMind hasn’t disclosed where the videos came from, though YouTube—a Google-owned platform—is a likely source.

For now, Veo 2 powers Google Labs’ VideoFX tool, which is rolling out to U.S. users through a waitlist. Alongside Veo 2, DeepMind has updated its Imagen 3 text-to-image model, improving image quality, composition, and style adherence in its ImageFX tool, which is available in more than 100 countries.

While limitations remain, its advances in realism, cinematic control, and scaling potential make Veo 2 a strong contender in the AI video race.