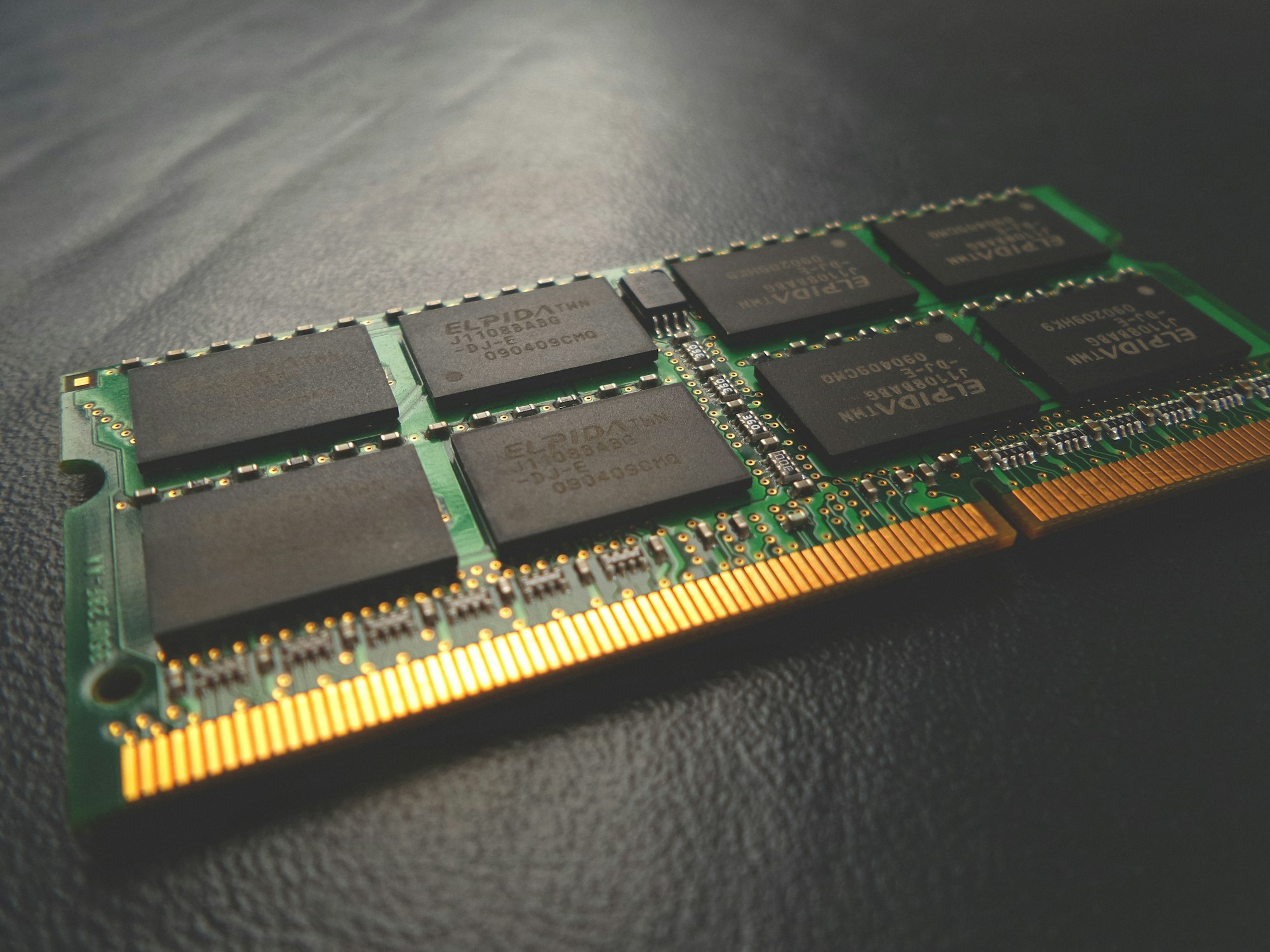

Meta is developing an in-house AI chip to train its AI models. Because, why not?

The chip could help Meta reduce its reliance on outside chip suppliers like Nvidia.

Think about this: why keep paying Nvidia billions of dollars when you can build your own chips? That's why Meta is said to be testing its first in-house AI training chip, which could mean less dependence on Nvidia’s powerful, and pricey, GPUs.

If it goes well, Meta could slash infrastructure costs and make its AI tools run even better on Facebook, Instagram, and WhatsApp.

This isn’t Meta’s first rodeo with AI hardware, but unlike its previous chips, which were built for running AI rather than training it, this one is designed for the heavy lifting. It’s also supposed to be more power-efficient than traditional GPUs, thanks to a specialized design focused on AI workloads. And Meta isn’t doing it alone: it’s working with TSMC, the same semiconductor giant that supplies Apple and Nvidia.

Meta’s AI obsession isn’t exactly a secret. The company is expected to spend up to $65 billion on infrastructure this year, with a massive chunk going toward AI. Just two years ago, Meta reportedly dropped over $10 billion on Nvidia GPUs alone. If this in-house chip plan works out, it could be a game-changer—especially since Nvidia currently dominates the AI hardware space.

Meta isn’t the only one moving away from off-the-shelf AI chips. OpenAI, Google, Amazon, and plenty of other big tech players are building their own to get all the perks of custom silicon—faster performance, lower costs, and more control. It’s a clear sign that the industry is shifting in that direction.

While the initial chip test seems like baby steps, the chip could become the backbone of Meta’s AI infrastructure by 2026. Will this shake up the AI hardware race? Maybe. But one thing’s for sure: Meta is betting big on controlling its own AI future.