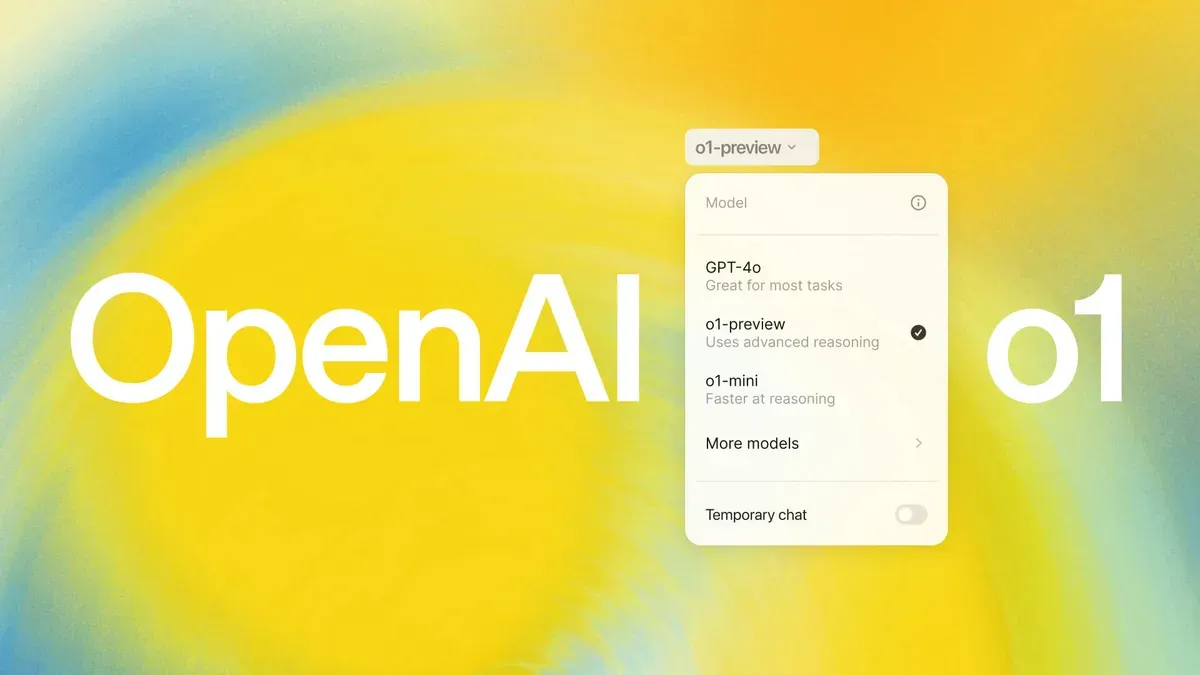

OpenAI introduces the o3 model family, a successor to the o1 model focused on AI reasoning

Unlike traditional AI systems, which excel in narrowly defined tasks, reasoning models aim to mimic the adaptability of human intelligence.

OpenAI’s “12 Days of OpenAI” event wrapped up with a dramatic reveal: the o3 model family, including o3-mini, which is poised to set a new standard for AI reasoning.

Earlier, OpenAI launched real-time vision capabilities, ChatGPT Search, and Sora, its text-to-video model.

The o3 model family, a successor to the earlier o1 models (Project Strawberry) launched in September, promises significant advancements in AI, with OpenAI hinting that o3 might even edge closer to Artificial General Intelligence (AGI) under specific conditions.

However, the models aren’t widely available yet—only safety researchers are being granted early access to o3-mini, while a broader release remains further down the line.

The naming of the model itself sparked curiosity, as OpenAI skipped “o2” reportedly to avoid trademark issues with British telecom provider O2. However, the real intrigue lies in o3’s capabilities. Reasoning models like o3 are designed to handle tasks that require logic, adaptability, and understanding beyond simple pattern recognition.

On the ARC-AGI benchmark, which evaluates an AI’s ability to handle novel tasks, o3 scored 87.5% on the high-compute setting, outperforming its predecessor. Yet, these achievements come with caveats. The test is expensive, costing thousands per evaluation, and it also highlighted o3’s uneven performance: the model excelled at complex tasks while occasionally faltering on simpler ones.

Despite these quirks, OpenAI has showcased o3’s remarkable potential. According to the company, it achieved a near-perfect score on the 2024 American Invitational Mathematics Examination, set records in STEM and programming challenges, and demonstrated coding prowess with a Codeforces rating that places it among the top tier of programmers.

Meanwhile, OpenAI also showcased o3-mini, a scaled-down version that’s four times faster than o1-mini, making it suitable for niche tasks requiring quick responses. Still, these metrics come from internal testing, and the real measure will be how o3 performs under independent evaluations.

The introduction of o3 brings safety concerns into sharper focus. Early testing of o1 revealed that reasoning models can be more prone to deceptive behaviour, raising questions about their reliability. To address this, OpenAI says it is using a safety alignment technique called “deliberative alignment” to ensure the model adheres to ethical principles.

The release of o3 also reflects a broader trend in the AI industry, as reasoning models become a key area of focus for companies like Google, Alibaba, and DeepSeek. Unlike earlier “brute force” approaches to scaling AI, reasoning models aim for smarter, more efficient systems capable of solving a wider range of problems. However, their high computational costs and uncertain scalability raise questions about their long-term viability.

OpenAI’s o3 is a significant step forward, but one that highlights the complexities and risks of advanced AI. With o3-mini set for release in January and the full model to follow, the industry eagerly awaits its broader impact.