YouTube launches AI-generated content labeling tool to address misinformation

The tool will help identify AI-generated videos and bring more transparency to online content.

Remember when those AI-generated images of Taylor Swift, which many people believed were real, flooded the Internet? Yeah, deepfakes and altered videos like that are made to deceive and mislead viewers.

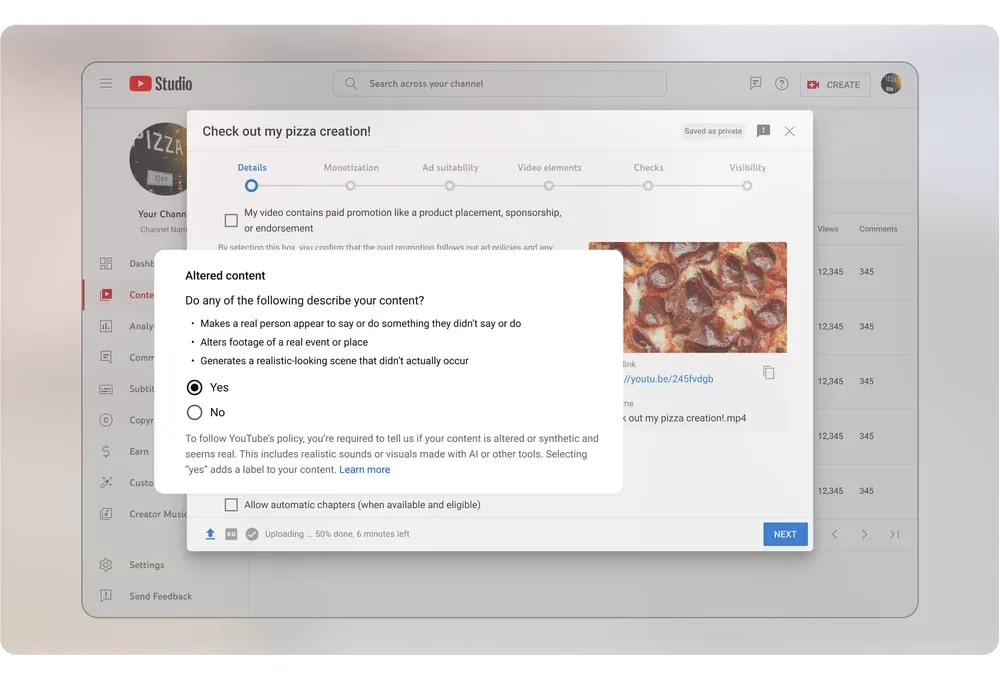

But YouTube wants to change that with a new labeling tool in its Creator Studio that lets you tell your viewers which of your content is AI-generated.

YouTube, which has 61.8 million creators worldwide, has promised to give them ample time to adjust to the tool. But creators who fail to label their AI-generated content could have that content removed or their account permanently suspended from the YouTube Partner Program, which allows users to monetize their videos.

Creators will not need to inform their viewers if they use AI for simple tasks such as writing scripts, searching for audio, and generating ideas and captions. Once a creator has labeled their content with the tool, viewers will see the label in the video's description box. For content on sensitive topics such as health and politics, however, the label will appear directly on the video itself.

YouTube also confirmed that it would update its privacy policy so that users can request the removal of AI-generated content that resembles their face or voice in any way.